From Guesswork to Growth: A Practical Creative Testing Roadmap

Passionate content and search marketer aiming to bring great products front and center. When not hunched over my keyboard, you will find me in a city running a race, cycling or simply enjoying my life with a book in hand.

Ad-hoc creative testing often produces noisy results.

When multiple variables change at once, performance moves but the reason stays unclear, leading to wasted spend and slow learning. High-performing paid media teams avoid this by treating creative testing as an experimentation system with defined hypotheses, budgets, and review cycles.

In this guide, you’ll learn how a creative testing roadmap brings structure to creative experiments, clarifies weekly priorities, and turns test results into confident scaling decisions.

TL;DR

- A creative testing roadmap turns testing into a system by defining what to test, in what order, and with what success criteria.

- Sequencing tests by layer (concepts, hooks, then variations) increases learning velocity and reduces false winners.

- Protecting test budgets and using a weekly cadence keeps experiments clean and comparable.

- Clear pre-defined benchmarks make it easier to decide when to iterate, scale, or cut creatives.

- Teams that feed learnings back into the roadmap compound performance instead of restarting from scratch.

What Is a Creative Testing Roadmap?

A creative testing roadmap is a forward-looking plan that defines which creative experiments will run, when they’ll launch, how much budget they’ll receive, and how success will be measured.

Instead of reacting to performance swings, teams use a roadmap to sequence tests deliberately, ensuring each experiment answers a clear question about audience motivation or message fit.

A roadmap typically outlines:

- The specific variable being tested, such as concept, hook, or execution.

- The timeline and duration for each test cycle.

- Budget allocation and success metrics agreed on before launch.

Unlike random testing, this structure prevents multiple variables from changing at once, making it easier to understand why performance moves and which insights are worth scaling.

The result is faster learning, cleaner data, and creative decisions driven by evidence rather than short-term spikes.
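One way to make this concrete is to record each planned experiment as a small structured entry. The sketch below is only an illustration: the class name, field names, dates, budget, and target are placeholders invented for the example, not a format the roadmap requires.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class RoadmapEntry:
    """One planned experiment on the roadmap (all field names are illustrative)."""
    hypothesis: str       # the question this test should answer
    layer: str            # "concept", "hook", or "variation"
    start: date           # planned launch date
    duration_days: int    # how long the test cycle runs
    budget: float         # spend reserved for this test
    primary_metric: str   # success metric agreed on before launch
    target: float         # benchmark the metric has to reach

    @property
    def end(self) -> date:
        """Date on which the test cycle is due for review."""
        return self.start + timedelta(days=self.duration_days)


# Placeholder values, chosen only to show the shape of an entry.
entry = RoadmapEntry(
    hypothesis="The time-saving benefit resonates with cold audiences",
    layer="concept",
    start=date(2025, 1, 6),
    duration_days=7,
    budget=500.0,
    primary_metric="CTR",
    target=0.012,
)
print(entry.layer, entry.start, entry.end)
```

Keeping the variable, timeline, budget, and metric in one record makes it harder to launch a test without agreeing on them first.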

Core Components of a Creative Testing Roadmap

A strong creative testing roadmap breaks testing into clear layers, so teams learn what drives performance before moving on to execution details.

By isolating each layer, marketers can identify which ideas deserve more investment and which should be cut early.

Concept Testing

Concept testing focuses on the core promise behind an ad, such as a pain point, desired outcome, or belief the audience already holds.

This is where teams validate what actually motivates action before investing in execution. Because the concept shapes the entire message, it typically has the largest impact on performance and should be tested before any smaller creative tweaks.

Strong concept tests answer questions like which problem resonates most or which outcome feels most compelling, giving teams a clear direction for future iterations.

Hook Testing

Hook testing isolates the opening seconds or headline to understand what stops the scroll.

By holding the underlying concept constant and only changing the hook, teams can pinpoint which angles grab attention early. This helps separate attention problems from messaging problems, preventing premature changes to ideas that may still have demand.

Effective hook testing improves learning speed and ensures strong concepts are not killed due to weak openings.

Variation Testing

Variation testing explores execution details once a concept proves demand.

This includes changes to visuals, CTAs, captions, creators, or formats, all while keeping the core message intact. The goal is to extend the lifespan of winning ideas without introducing new strategic variables that complicate analysis.

Variation testing helps teams scale what works by adapting execution to different audiences, placements, or fatigue signals, rather than restarting from zero.

Creative Testing Frameworks

A creative testing roadmap becomes more effective when teams apply lightweight frameworks to guide what gets tested first and why.

The following frameworks reduce guesswork and ensure every experiment is tied to a clear learning goal.

Hypothesis-Driven Testing

Hypothesis-driven testing turns creative ideas into testable statements instead of gut-driven guesses.

Each experiment is framed as a simple cause-and-effect hypothesis that links a specific message to a specific outcome. This forces teams to be explicit about why they believe a creative will work before it ever launches.

How to implement it in practice:

- Start every test with a single hypothesis written in plain language.

- Tie the hypothesis to one primary metric.

- Design the test so only one variable changes.

Example: “If we highlight the time-saving benefit in the first three seconds of the ad, then CTR will increase for cold audiences.”

To test this, the team:

- Keeps the concept and visuals the same.

- Produces two versions that differ only in the opening hook.

- Measures CTR as the success metric.

After the test, results are evaluated against the hypothesis, not just raw performance. Even a losing test produces a clear takeaway about what did not resonate, which feeds the next roadmap decision.
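To evaluate a CTR hypothesis like the one above, a team could check whether the gap between the two hooks is larger than chance would explain. The sketch below assumes a two-proportion z-test, which is one common choice and not something this guide prescribes. The function name and the click and impression counts are made up for illustration.

```python
import math


def ctr_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-sided two-proportion z-test on CTR.

    Returns (ctr_a, ctr_b, z, p_value), where z is positive when
    variant B has the higher CTR.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both hooks perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        raise ValueError("No variation in clicks; the test is undefined.")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value


# Hypothetical numbers: A is the original hook, B leads with the time-saving benefit.
ctr_a, ctr_b, z, p_value = ctr_z_test(100, 10_000, 150, 10_000)
print(f"CTR A={ctr_a:.2%}  CTR B={ctr_b:.2%}  z={z:.2f}  p={p_value:.4f}")
```

A small p-value supports the hypothesis only if the test changed one variable. If the concept or visuals also differed, the same number says nothing about the hook.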

Prioritization Frameworks

Prioritization frameworks help teams decide what to test first when ideas outnumber budget and bandwidth.

Instead of debating opinions, concepts and hooks are scored against a consistent set of criteria. This ensures testing resources are spent on ideas most likely to generate meaningful learning or performance gains.

How to implement it in practice:

- List all proposed concepts or hooks for the next test cycle.

- Score each idea using a simple framework like ICE (Impact, Confidence, Ease) or PIE (Potential, Importance, Ease).

- Rank ideas by total score and test the top candidates first.

Example using ICE, with ease expressed as effort (lower effort is better):

A team evaluates three new concepts:

- Concept A: New pain-point angle. Impact: High. Confidence: Medium. Effort: Low.

- Concept B: Creator testimonial remix. Impact: Medium. Confidence: High. Effort: Medium.

- Concept C: New format experiment. Impact: High. Confidence: Low. Effort: High.

After scoring, Concept A ranks highest and earns the first test slot. Lower-scoring ideas are not discarded, but queued for later once stronger signals are validated.
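The ranking can be reproduced with a few lines of code. This sketch assumes a 1 to 3 scale for Low, Medium, and High, inverts effort so that lower effort scores higher, and multiplies the three values. That mapping is an assumption made for the example; teams often use a 1 to 10 scale or average the scores instead.

```python
# Assumed numeric scale for the qualitative ratings used in the example.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}


def ice_score(impact, confidence, effort):
    """Multiply impact, confidence, and ease (ease is inverted effort)."""
    ease = 4 - LEVELS[effort]  # Low effort -> 3, High effort -> 1
    return LEVELS[impact] * LEVELS[confidence] * ease


# Ratings taken from the three concepts above: (impact, confidence, effort).
concepts = {
    "Concept A: New pain-point angle": ("High", "Medium", "Low"),
    "Concept B: Creator testimonial remix": ("Medium", "High", "Medium"),
    "Concept C: New format experiment": ("High", "Low", "High"),
}

scores = {name: ice_score(*ratings) for name, ratings in concepts.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(f"{scores[name]:>2}  {name}")
```

Under this mapping Concept A scores 18, Concept B 12, and Concept C 3, which matches the order described above.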

By applying prioritization frameworks consistently, teams avoid chasing shiny ideas and keep their creative testing roadmap focused on the highest-value experiments.

Weekly Testing Cadence

A creative testing roadmap only works when paired with a consistent testing rhythm.

Most performance teams rely on weekly cycles to balance speed, platform learning, and reliable decision-making.

Weekly testing cadences typically follow a simple loop:

- Launch a small set of new creative tests at the start of the week.

- Allow platforms enough time to exit the learning phase.

- Review results against predefined success metrics.

- Queue winners for iteration or scale, and document learnings.

Running too many tests at once slows learning and muddies results. Most experimentation frameworks recommend focusing on one testing layer per cycle, such as concepts one week and hooks the next, to keep insights clean and actionable.
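A rotating schedule is one simple way to hold to one layer per cycle. The sketch below assumes a Monday launch, a review six days later, and a fixed rotation through concepts, hooks, and variations. These are illustrative choices, and the function name and dates are hypothetical.

```python
from datetime import date, timedelta

# Assumed rotation order: one testing layer per weekly cycle.
LAYERS = ["concept", "hook", "variation"]


def weekly_schedule(first_launch, weeks):
    """Return (launch_date, review_date, layer) for each weekly cycle."""
    schedule = []
    for i in range(weeks):
        launch = first_launch + timedelta(weeks=i)
        review = launch + timedelta(days=6)  # review at the end of the cycle
        schedule.append((launch, review, LAYERS[i % len(LAYERS)]))
    return schedule


# Hypothetical start date (a Monday).
schedule = weekly_schedule(date(2025, 1, 6), weeks=4)
for launch, review, layer in schedule:
    print(f"launch {launch}  review {review}  layer: {layer}")
```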

Budget Allocation for Creative Tests

Reliable creative insights depend on protecting test spend from the pressures of scaling.

When budgets aren’t clearly defined, tests are often underfunded or overtaken by performance campaigns. As a result, creatives fail to exit learning phases, results become noisy, and teams end up reacting to short-term ROAS instead of true creative signal.

High-performing teams avoid this by separating testing and scaling budgets from day one. Dedicated test spend ensures each concept receives enough delivery to be evaluated fairly and compared consistently over time.

Once a creative demonstrates repeatable performance, it can move into scaling campaigns with increased investment. This structure preserves the integrity of experiments, prevents premature optimization, and creates a dependable system for creative evaluation. For a deeper breakdown of how to structure test budgets, this guide on ad testing explains why isolating spend leads to clearer decisions.
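The split between testing and scaling spend can be checked with simple arithmetic before a cycle starts. In this sketch the 25% test share, the minimum spend per creative, and the function name are all placeholder assumptions. The right values depend on the account and the platform.

```python
def split_budget(total, test_share, n_test_creatives, min_per_creative):
    """Reserve a share of spend for testing and divide it evenly across creatives.

    Raises ValueError if the reserved budget cannot give every creative
    the minimum spend assumed necessary for a fair evaluation.
    """
    test_budget = total * test_share
    scaling_budget = total - test_budget
    per_creative = test_budget / n_test_creatives
    if per_creative < min_per_creative:
        affordable = int(test_budget // min_per_creative)
        raise ValueError(
            f"Test budget funds only {affordable} creatives at "
            f"{min_per_creative} each; reduce the batch or raise the share."
        )
    return {
        "test_budget": test_budget,
        "scaling_budget": scaling_budget,
        "per_creative": per_creative,
    }


# Hypothetical weekly budget of 10,000 with a quarter reserved for tests.
plan = split_budget(total=10_000, test_share=0.25, n_test_creatives=5, min_per_creative=300)
print(plan)
```

Failing loudly when a test would be underfunded is a deliberate choice here: it forces the team to test fewer creatives rather than spread spend too thin.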

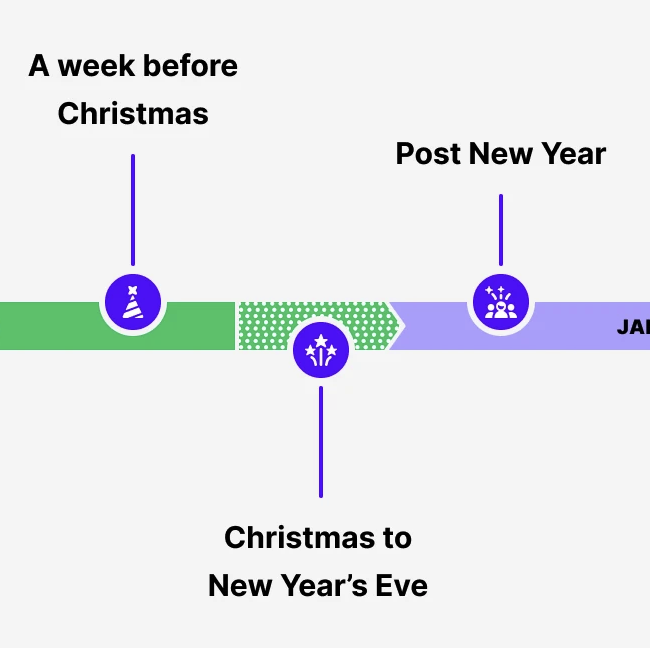


Turning Results Into Scaling Decisions

Testing only matters if results translate into disciplined, repeatable decisions.

Before any creative goes live, teams should align on what success looks like – whether that’s CPA, CTR, or early engagement signals. Defining these benchmarks upfront eliminates hindsight bias and keeps evaluations objective once data comes in.

When a creative hits its targets, the next move isn’t aggressive budget increases. Instead, top teams expand horizontally first, applying the winning concept to new hooks, creators, formats, or placements to validate that performance holds across variations.

Vertical scaling comes later, once multiple executions prove consistent. This staged approach limits downside risk, protects spend, and turns isolated wins into durable growth through structured iteration.
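The staged approach can be written down as an explicit decision rule agreed on before launch. The sketch below uses CPA as the benchmark and requires three executions on target before vertical scaling. Both the threshold and the function name are assumptions for illustration, not fixed rules.

```python
def next_step(cpa, target_cpa, executions_on_target, required_executions=3):
    """Map test results to the next action in the staged scaling flow.

    cpa: observed cost per acquisition for the creative
    target_cpa: benchmark agreed on before launch
    executions_on_target: how many executions of the concept have hit target
    required_executions: assumed bar for "consistent" performance
    """
    if cpa > target_cpa:
        return "iterate or cut"
    if executions_on_target < required_executions:
        # Winning concept, but not yet proven across hooks, creators, or formats.
        return "expand horizontally"
    return "scale vertically"


# Hypothetical results for three creatives against a target CPA of 30.
print(next_step(cpa=42.0, target_cpa=30.0, executions_on_target=0))
print(next_step(cpa=27.5, target_cpa=30.0, executions_on_target=1))
print(next_step(cpa=26.0, target_cpa=30.0, executions_on_target=3))
```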

Feeding Learnings Back Into the Roadmap

A creative testing roadmap should evolve with every test cycle, not sit unchanged.

Each round of results should directly influence what gets tested next. Strong performers point the way to new hooks or variations, while underperforming tests clarify which messages, formats, or angles to move away from. This feedback loop allows teams to build on real signal instead of restarting from zero each week.

When results begin to slip, the issue is often creative fatigue – not bidding or audience strategy. Rather than making minor tweaks, high-performing teams treat these moments as prompts to explore new concepts or angles.

By continuously updating the roadmap with real performance learnings, teams sustain momentum, avoid redundant testing, and compound gains over time.

Creative Testing Roadmap Summary

A creative testing roadmap replaces guesswork with a repeatable system for learning what actually drives performance.

By defining what to test, when to test it, and how to act on results, teams align creative production with paid media execution instead of reacting to short-term swings. Structured testing improves learning velocity, reduces wasted spend, and creates clearer paths to scale.

The next step is simple: document your current testing layers, lock in a weekly cadence, and protect budget for experimentation. From there, let each test feed the next one and allow performance to compound over time.

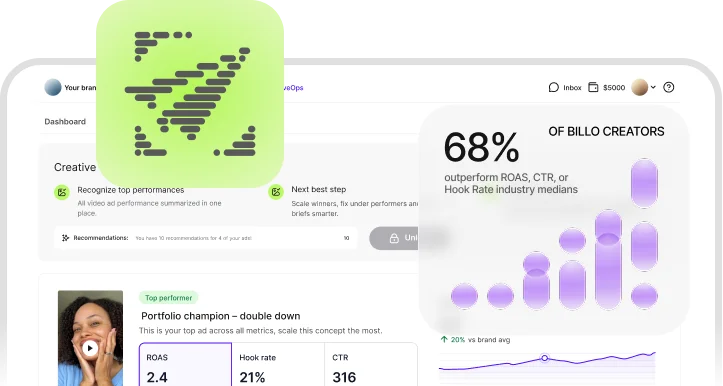

Explore Billo to source creators and creatives for a smooth creative testing roadmap.

FAQs

How many creatives should I test per week?

Most performance teams test a small, focused batch each week rather than flooding the account. Testing a limited number of creatives allows platforms to exit learning and produces clearer insights about what actually drives performance.

How long should a creative test run?

Creative tests should run long enough to gather stable delivery and meaningful data. Ending tests too early increases the risk of false winners, while letting them run through the learning phase improves decision quality.

What metrics matter most when evaluating creative?

The right metric depends on the test goal, but teams often use CPA, CTR, or early engagement signals like hook retention. Defining success metrics before launch keeps evaluations objective and consistent across test cycles.

SEO Lead
