From Avatars to Authenticity: Navigating the 2025 Wave of AI Generated UGC

Billo is a data-driven creator marketing platform that turns creator videos into trackable, scalable ads across Meta, TikTok, and YouTube Shorts. Built Billo from zero to 34k+ brands and hundreds of thousands of ads, driving $500M+ in tracked purchase value. I focus on systems, clarity, and execution.

That UGC ad you just watched? The person might not exist, and it’s getting harder to tell.

In 2024 the U.S. FTC outlawed testimonials “by someone who does not exist,” with civil fines of up to $51,744 for each undisclosed fake review. Meanwhile, provenance tags such as C2PA “Content Credentials” are moving toward ISO standardization as ISO CD 22144, promising cryptographic origin trails for every pixel by 2026.

As AI tools flood feeds with polished but synthetic clips, authenticity itself is becoming a premium product. Let’s unpack how AI generated UGC reshapes conversion metrics, exposes platform blind spots, and invites a coming clamp-down, so you can understand the ground shifting beneath every scroll.

TL;DR

- Synthetic UGC converts like human content – a labeled AI testimonial can still convert for now, but the future is not clear.

- Detection tools can’t keep up – even minor edits drop deepfake detection accuracy to coin-flip levels, and most platforms don’t flag video or audio.

- Regulators are stepping in fast – the FTC now fines undisclosed synthetic endorsements up to $51,744 each; the EU mandates AI labels at first exposure.

- Provenance tech is going mainstream – C2PA-backed Content Credentials are headed for ISO standardization by 2026, embedding trust into every frame.

- Authenticity is gaining value – as perfect fakes flood the feed, verified human voices stand out and build lasting trust.

- AI creators bring scale, people bring emotional impact – brands are using both to balance control and connection.

- Meta’s tools now automate end-to-end ad creation – from AI actors to targeting, the one-click ad factory is already here.

Synthetic Realism Keeps Pace with Human Persuasion

The conversion gap between human and machine spokespeople has closed. If it feels authentic, buyers act.

I’ve been watching the numbers shift in real time. A 2025 randomized study found that AI-written policy messages can move opinions by 9.7 percentage points. And slapping an “AI-generated” label on them doesn’t blunt that punch at all.

Out in the market, the sentiment echoes the lab: 72% of marketers now report that social posts created with generative tools outperform human-only content. Yet the hunger for the real thing hasn’t faded – 86% of consumers still gravitate toward brands that feel “authentic & honest” online. Translation: synthetic copy already persuades at scale, but authenticity is still the coin of the realm.

The data tells a simple story: AI generated UGC can persuade as effectively as flesh-and-blood creators, yet its very success intensifies the trust crunch. The more perfect fakes flood the feed, the more valuable a verified human voice (or a verified synthetic label) will become.

Spotting the Fakes: Why Detection Still Lags

I keep hearing, “Don’t worry – platforms will catch the synthetics.”

Hard truth: they aren’t close.

A 2025 arXiv benchmark ran today’s “state-of-the-art” deepfake detectors through one round of basic post-processing; accuracy collapsed to 52%, aka coin-flip odds. Even the platform gatekeepers admit the gap.

Meta’s own documentation says its automatic “AI content” labels cover still images only, meaning video and audio sail straight past the filters (Cybernews report). In other words, the infrastructure meant to police AI generated UGC is years behind the tools that create it, leaving our feeds wide open to convincing but untraceable fakes.

Until detection tech levels up, every synthetic spokesperson we release shapes buyer trust (and lands in regulators’ crosshairs) long before anyone can prove they weren’t human.

The Regulatory Clamp-Down

In August 2024, the U.S. FTC finalized a rule banning testimonials “by someone who does not exist,” empowering enforcement actions with civil fines of up to $51,744 for each undisclosed synthetic review.
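To put that per-review ceiling in perspective, here is a minimal back-of-the-envelope sketch. It assumes every undisclosed synthetic review is counted as a separate violation at the maximum amount; actual penalties are decided case by case, and the function name is purely illustrative.

```python
# Assumption: the per-violation ceiling cited above, applied to every review.
# Real penalties are set case by case and may be far lower.
MAX_FINE_PER_VIOLATION_USD = 51_744


def max_fine_exposure(undisclosed_reviews: int) -> int:
    """Upper bound on civil fines if each review counts as one violation."""
    if undisclosed_reviews < 0:
        raise ValueError("review count cannot be negative")
    return undisclosed_reviews * MAX_FINE_PER_VIOLATION_USD


if __name__ == "__main__":
    for count in (1, 10, 100):
        exposure = max_fine_exposure(count)
        print(f"{count:>3} undisclosed reviews -> up to ${exposure:,}")
```

Even a small batch of undisclosed synthetic testimonials adds up quickly under that worst-case assumption, which is why disclosure is cheaper than cleanup.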

Meanwhile, the EU’s AI Act (Regulation EU 2024/1689, Article 50) now mandates clear labeling of deepfake and AI-generated content at first exposure, meaning any brand that deploys synthetic spokespeople must disclose them upfront or face penalties under European law.

These moves aren’t isolated skirmishes; they signal a broader shift toward holding marketers and the platforms they use accountable for transparency in the age of perfect-looking fakes.

Content Provenance Tech

I’ve watched provenance move from buzzword to built-in. In January 2025, the NSA, ACSC, CCCS, and NCSC issued joint guidance urging early adoption of C2PA’s Content Credentials, which embed cryptographic provenance data into every image, video frame, and audio track.

Even more significant, this framework has been fast-tracked as ISO CD 22144, with full publication expected in 2026. Soon, every synthetic asset can carry an unbroken chain of custody, making “Who made this?” as answerable as “When was it made?”
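To make “chain of custody” concrete, here is a toy sketch of the underlying idea: each edit step records a hash of the asset and a link to the previous step, so any later tampering breaks the chain. This is not the C2PA manifest format or its API. Real Content Credentials rely on cryptographically signed manifests, and every name below is hypothetical.

```python
import hashlib
import json


def _digest(record: dict) -> str:
    # Stable JSON serialization so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_step(chain: list, actor: str, action: str, asset_bytes: bytes) -> list:
    """Return a new chain with one more entry linked to the previous one."""
    entry = {
        "actor": actor,
        "action": action,
        "asset_sha256": hashlib.sha256(asset_bytes).hexdigest(),
        "prev": chain[-1]["entry_hash"] if chain else None,
    }
    # The entry hash covers everything above, including the link to "prev".
    entry["entry_hash"] = _digest(entry)
    return chain + [entry]


def verify(chain: list) -> bool:
    """Check that every entry is unmodified and points at its predecessor."""
    prev = None
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev"] != prev or _digest(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True


if __name__ == "__main__":
    chain = append_step([], "creator", "captured", b"raw video bytes")
    chain = append_step(chain, "editor", "color-graded", b"edited video bytes")
    print(verify(chain))            # chain is intact
    chain[0]["actor"] = "someone else"
    print(verify(chain))            # tampering is detected
```

A plain hash chain like this only shows that a record was altered; it cannot prove who wrote it. That is the gap digital signatures fill in production provenance systems.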

Provenance tech is set to eventually redraw the rules of content trust, turning every pixel into an audit trail.

Trust-Whiplash & the Authenticity Premium

I call it “trust-whiplash”: that split-second when audiences realize their feeds are filled with perfect fakes and default to skepticism.

According to a recent Pew briefing on social trust, adults under 30 now trust social-media information nearly as much (52%) as national news outlets (56%). Yet 86% of consumers say they favor brands that feel “authentic & honest” online.

That tension is already creating an authenticity premium: in a sea of flawless synthetics, genuinely human-made content will cut through the noise, earning more engagement and likely commanding higher ad budgets.

Brands that double down on real voices now will build the trust reserve they need when skepticism spikes.

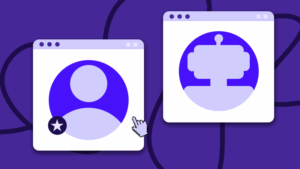

Synthetic Stars vs Human Creators

We’ve reached the fork in the feed. On one side: human creators with real lives, schedules, and reputations. On the other: synthetic personas who never sleep, never misstep, and never ask for a raise.

Brands are already placing bets. Virtual influencers like Imma (Japan) and Aitana (Spain) are signing with Porsche, BMW, and Amazon Fashion. Not for authenticity, but for consistency and control. They’re immune to drama, legal blowback, or viral missteps. They show up on time and on-brand. Every time.

This isn’t a replacement; it’s a divergence. Human creators still drive cultural resonance. But synthetic spokespeople are opening up entirely new lanes: round-the-clock campaigns and instant localization. The tradeoff is emotional impact versus scalability.

As brands choose between the trust of the human and the utility of the synthetic, the smartest ones are already building hybrid rosters.

Meta’s One-Click Ad Factory

As one of the industry giants, Meta isn’t just tolerating synthetic UGC – it’s industrializing it. I’ve seen the direction firsthand: automation at every layer, from script to synthetic actor to paid placement.

In 2024, Meta rolled out AI Studio, a platform that lets brands generate AI influencers trained on a brand’s voice and tone, ready to deploy across Messenger, WhatsApp, and Instagram.

Paired with the company’s generative ad tools for instant copy, visuals, and targeting, this creates what’s effectively a one-click ad factory. And it’s not theoretical: Meta has already tested “digital personas” of public figures like MrBeast and Snoop Dogg, putting synthetic interaction directly in the feed.

Meta’s goal is clear: reduce ad production cost to near zero, then scale with AI-native creative. For brands, this unlocks near-infinite iteration, but also introduces real risk if synthetic assets are deployed without oversight or proper disclosure. And how will that affect users whose feeds are full of AI content?

Final Take: Scale Smart, Signal Real

Synthetic realism isn’t coming; it’s here. AI generated UGC now converts like the real thing, slips past most detection tools, and spreads faster than regulators can react. But with C2PA-backed provenance tech on the rise and fines of up to $51,744 per fake testimonial, betting on the Wild West window is risky.

What wins next isn’t just speed or volume – it’s trust strategy. Use synthetic creators where scale makes sense, but anchor your brand in transparent provenance, human-aligned values, and clear disclosure.

Because in a future where everything looks real, proving it is the new creative edge.

CEO & Co-founder @ Billo
